This article explains how to set up (configure and manage) RAID 0, 1, 5 and 10 on CentOS, Red Hat and Ubuntu/Debian.

Contents:

- RAID?

- Set up RAID 0

- Set up RAID 1

- Set up RAID 5

- Set up RAID 10

1- RAID?

RAID (Redundant Array of Independent Disks, originally Redundant Array of Inexpensive Disks) is a storage technology that combines multiple disk drives into a single logical unit for data redundancy, performance improvement, or both.
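For a quick sense of what each level in this article costs in capacity, here is a minimal bash sketch. It assumes equal-sized members and ignores metadata and chunk rounding; `raid_usable` is a hypothetical helper name, and sizes can be in any unit (KiB blocks in the example, matching the per-device size of 1048064 that mdadm reports for the 1 GB test disks).

```shell
#!/usr/bin/env bash
# raid_usable LEVEL NDISKS SIZE
# Hypothetical helper: approximate usable size of an md array built from
# NDISKS equal-sized members of SIZE units each (metadata overhead ignored).
raid_usable() {
    local level=$1 n=$2 size=$3
    case $level in
        0)  echo $(( n * size )) ;;         # striping: all members hold data
        1)  echo "$size" ;;                 # mirroring: each member holds a full copy
        5)  echo $(( (n - 1) * size )) ;;   # one member's worth goes to parity
        10) echo $(( n * size / 2 )) ;;     # two copies of every chunk (default)
        *)  echo "unsupported RAID level: $level" >&2; return 1 ;;
    esac
}

raid_usable 5 3 1048064    # RAID 5, three disks      → 2096128
raid_usable 5 4 1048064    # RAID 5 grown to four     → 3144192
raid_usable 10 4 1048064   # RAID 10, four disks      → 2096128
```

The RAID 5 and RAID 10 results agree with the block counts that /proc/mdstat and mdadm --detail show later in this article.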

Note 1: To manage RAID on Linux, you need to install the RAID manager, mdadm (the tool that manages MD devices, also known as Linux Software RAID).

- Find the name of the RAID manager package.

1- CentOS and Red Hat:

# yum search raid

...

mdadm.i686 : The mdadm program controls Linux md devices (software RAID arrays)

...

2- Ubuntu and Debian:

# apt-cache search raid

...

mdadm - tool to administer Linux MD arrays (software RAID)

...

- Install mdadm (the RAID manager)

1- CentOS and Red Hat:

# yum install mdadm

2- Ubuntu and Debian:

# apt-get install mdadm

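Note 2: After configuring an array, save its definition in the mdadm configuration file so that the array is assembled again after a reboot.

1- CentOS and Red Hat:

# mdadm --detail --scan >> /etc/mdadm.conf

2- Ubuntu and Debian:

# mdadm --detail --scan >> /etc/mdadm/mdadm.conf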
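Because `>>` appends unconditionally, running the command again after creating another array writes duplicate ARRAY lines. A minimal bash sketch of an idempotent alternative follows; `append_new_arrays` is a hypothetical helper that reads `mdadm --detail --scan` output on stdin and appends only the arrays whose UUID is not already in the file.

```shell
#!/usr/bin/env bash
# append_new_arrays CONF
# Hypothetical helper: read "mdadm --detail --scan" output on stdin and
# append each ARRAY line to CONF unless its UUID is already listed there.
append_new_arrays() {
    local conf=$1 line uuid
    touch "$conf" || return 1
    while IFS= read -r line; do
        case $line in
            ARRAY*) ;;              # only ARRAY lines describe arrays
            *) continue ;;
        esac
        uuid=${line##*UUID=}        # text after the last "UUID="
        uuid=${uuid%% *}            # cut at the next space, if any
        if ! grep -qF "UUID=$uuid" "$conf"; then
            printf '%s\n' "$line" >> "$conf"
        fi
    done
}
```

Usage on CentOS/Red Hat: `mdadm --detail --scan | append_new_arrays /etc/mdadm.conf` (use /etc/mdadm/mdadm.conf on Ubuntu/Debian).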

2- Set up RAID 0:

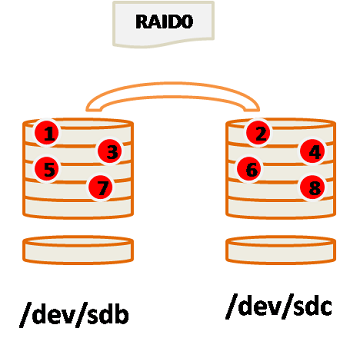

RAID 0 (block-level striping without parity or mirroring) has no (or zero) redundancy. It provides improved performance and additional storage but no fault tolerance: any drive failure destroys the array, and the likelihood of failure increases with the number of drives in the array.

RAID 0 (block-level striping)
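To make "block-level striping" concrete: with N members and a chunk size C (512 KiB here, per /proc/mdstat), consecutive chunks go to consecutive members in round-robin order. The bash sketch below maps a byte offset on the array to a member and an offset inside that member's data area. It is a simplified model that assumes equal-sized members and ignores the metadata area at the start of each member; `raid0_locate` is a hypothetical name.

```shell
#!/usr/bin/env bash
# raid0_locate OFFSET NDISKS [CHUNK_KIB]
# Hypothetical helper: map a byte offset in a RAID 0 array to the member
# (0-based, in RaidDevice order) and the byte offset inside that member's
# data area, assuming simple round-robin striping over equal-sized members.
raid0_locate() {
    local offset=$1 ndisks=$2 chunk_kib=${3:-512}
    local chunk_bytes=$(( chunk_kib * 1024 ))
    local chunk=$(( offset / chunk_bytes ))     # which chunk of the array
    local within=$(( offset % chunk_bytes ))    # position inside that chunk
    local disk=$(( chunk % ndisks ))            # chunks rotate across members
    local stripe=$(( chunk / ndisks ))          # full rows of chunks before it
    echo "disk $disk, byte $(( stripe * chunk_bytes + within ))"
}

raid0_locate 0 2          # → disk 0, byte 0
raid0_locate 524288 2     # → disk 1, byte 0       (second 512 KiB chunk)
raid0_locate 1049000 2    # → disk 0, byte 524712  (third chunk, row 1)
```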

I have two disks, /dev/sdb and /dev/sdc, of 1 GB each.

- Verify the disks

# fdisk -l /dev/sdb

Disk /dev/sdb: 1073 MB, 1073741824 bytes

255 heads, 63 sectors/track, 130 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

Disk /dev/sdb doesn't contain a valid partition table

# fdisk -l /dev/sdc

Disk /dev/sdc: 1073 MB, 1073741824 bytes

255 heads, 63 sectors/track, 130 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

Disk /dev/sdc doesn't contain a valid partition table
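Since the same disks are reused for every RAID level in this article, it helps to check that a disk is not still a member of a running array before building a new one. The bash sketch below scans /proc/mdstat-formatted text on stdin for the device; `md_member_of` is a hypothetical name. A stopped array does not appear in /proc/mdstat, so `mdadm --examine /dev/sdb`, which reads the on-disk superblock, remains the authoritative check.

```shell
#!/usr/bin/env bash
# md_member_of DEVICE
# Hypothetical helper: read /proc/mdstat-formatted text on stdin and print
# the name of every running array that lists DEVICE (e.g. /dev/sdb) as a member.
md_member_of() {
    local dev=${1#/dev/}                 # /dev/sdb -> sdb
    awk -v dev="$dev" '
        /^md[0-9]+ :/ {
            for (i = 3; i <= NF; i++) {
                name = $i
                sub(/\[.*/, "", name)    # "sdb[0]" -> "sdb", "sde[4](S)" -> "sde"
                if (name == dev) print $1
            }
        }
    '
}

# Typical use on a live system:
#   md_member_of /dev/sdb < /proc/mdstat
```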

- Create a RAID 0 (level 0) device, /dev/md0, from the disks /dev/sdb and /dev/sdc

# mdadm --create /dev/md0 --level=0 --raid-devices=2 /dev/sdb /dev/sdc

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md0 started.

- Verify the creation of the RAID 0 disk.

# mdadm --detail --scan

ARRAY /dev/md0 metadata=1.2 name=rhel:0 UUID=7b5ee535:1e1b850e:773566d3:7752d175

- Also verify with the RAID status file in the /proc directory

# cat /proc/mdstat

Personalities : [raid0]

md0 : active raid0 sdc[1] sdb[0]

2095104 blocks super 1.2 512k chunks

unused devices: <none>

- You can use /dev/md0 as an LVM physical volume, or create a partition on it with fdisk.

# fdisk /dev/md0

WARNING: DOS-compatible mode is deprecated. It's strongly recommended to switch off the mode (command 'c') and change display units to sectors (command 'u').

Command (m for help): n

Command action

e extended

p primary partition (1-4)

p

Partition number (1-4): 1

First cylinder (1-523776, default 257):

Using default value 257

Last cylinder, +cylinders or +size{K,M,G} (257-523776, default 523776):

Using default value 523776

Command (m for help): t

Selected partition 1

Hex code (type L to list codes): fd

Changed system type of partition 1 to fd (Linux raid autodetect)

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

Syncing disks.

- To verify the size of the RAID 0 disk

# fdisk -l /dev/md0

Disk /dev/md0: 2145 MB, 2145386496 bytes

2 heads, 4 sectors/track, 523776 cylinders

Units = cylinders of 8 * 512 = 4096 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 524288 bytes / 1048576 bytes

Disk identifier: 0xd2d27810

Device Boot Start End Blocks Id System

/dev/md0p1 257 523776 2094080 fd Linux raid autodetect

- Create a file system on the first partition of the RAID 0 device

# mkfs.ext3 /dev/md0p1

3- Set up RAID 1:

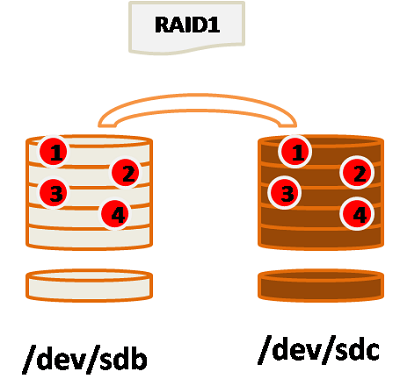

In RAID 1 (mirroring without parity or striping), data is written identically to two drives, producing a “mirrored set”.

RAID 1 (mirroring)

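- I have two disks, /dev/sdb and /dev/sdc, of 1 GB each. (If they still belong to the RAID 0 array from the previous section, first stop that array with mdadm -S /dev/md0 and clear their superblocks with mdadm --zero-superblock.)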

# mdadm --create /dev/md1 --level=1 --raid-devices=2 /dev/sdb /dev/sdc

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md1 started.

- Verify the creation

# mdadm --detail --scan

ARRAY /dev/md1 metadata=1.2 name=rhel:1 UUID=479fa3ed:c2317ac4:7a104152:6e96823e

- Also verify with the RAID status file in the /proc directory

# cat /proc/mdstat

Personalities : [raid1]

md1 : active raid1 sdc[1] sdb[0]

1048564 blocks super 1.2 [2/2] [UU]

unused devices: <none>
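The `[2/2] [UU]` part of this output is the array's health: the number of configured members versus working members, then one character per member slot, `U` for up and `_` for missing or failed. A minimal bash/awk sketch that reports each redundant array as ok or degraded, assuming the /proc/mdstat layout shown in this article; `mdstat_health` is a hypothetical name, and RAID 0 arrays are not reported because they have no such field.

```shell
#!/usr/bin/env bash
# mdstat_health
# Hypothetical helper: read /proc/mdstat-formatted text on stdin and print
# "<array> <level> ok|degraded" for every array that has a [U_] status field.
mdstat_health() {
    awk '
        /^md[0-9]+ :/ { name = $1; level = $4; next }   # "md1 : active raid1 ..."
        name != "" && match($0, /\[[U_]+\]/) {
            status = substr($0, RSTART + 1, RLENGTH - 2) # "[UU]" -> "UU"
            print name, level, (index(status, "_") ? "degraded" : "ok")
            name = ""
        }
    '
}

# Typical use on a live system:
#   mdstat_health < /proc/mdstat
```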

- You can use /dev/md1 as an LVM physical volume, or create a partition on it with fdisk.

# fdisk /dev/md1

- To verify the size of the RAID 1 disk

# fdisk -l /dev/md1

Disk /dev/md1: 1073 MB, 1073729536 bytes

2 heads, 4 sectors/track, 262141 cylinders

Units = cylinders of 8 * 512 = 4096 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0xc842f68b

Device Boot Start End Blocks Id System

/dev/md1p1 1 262141 1048562 fd Linux raid autodetect

4- Set up RAID 5:

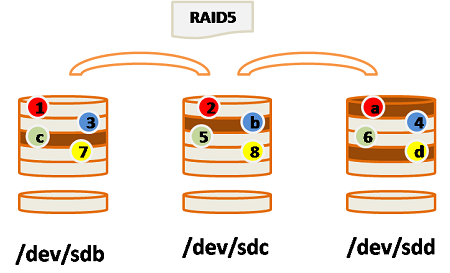

RAID 5 (block-level striping with distributed parity) distributes parity along with the data and requires all drives but one to be present to operate. A single drive failure does not destroy the array: subsequent reads can be calculated from the distributed parity, so the failure is masked from the end user. RAID 5 requires at least three disks.

RAID 5 (block-level striping with distributed parity)
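Conceptually, the parity that RAID 5 stores is the bitwise XOR of the data chunks in each stripe, which is why any one missing chunk can be recomputed from the others. The toy bash sketch below works on single bytes instead of 512 KiB chunks and ignores how mdadm rotates parity across members (the left-symmetric layout reported by mdadm --detail); `xor_all` is a hypothetical name.

```shell
#!/usr/bin/env bash
# xor_all VALUE...
# Hypothetical helper: XOR all arguments together (hex like 0x5A is accepted).
xor_all() {
    local acc=0 v
    for v in "$@"; do
        acc=$(( acc ^ v ))
    done
    echo "$acc"
}

d0=0x5A d1=0x3C d2=0xF0            # three "data chunks" of one byte each
p=$(xor_all "$d0" "$d1" "$d2")     # parity chunk for this stripe
# Suppose the disk holding d1 fails: rebuild it from the surviving chunks.
rebuilt=$(xor_all "$d0" "$d2" "$p")
printf 'parity=0x%02X rebuilt d1=0x%02X\n' "$p" "$rebuilt"
# prints: parity=0x96 rebuilt d1=0x3C
```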

I have three disks, /dev/sdb, /dev/sdc and /dev/sdd, of 1 GB each.

# mdadm --create /dev/md5 --level=5 --raid-devices=3 /dev/sdb /dev/sdc /dev/sdd

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md5 started.

- Verify the creation of the RAID 5 disk

# mdadm --detail --scan

ARRAY /dev/md5 metadata=1.2 name=rhel:5 UUID=9e7113b6:2d5dc78d:db553d53:d6cc4555

- Verify the state of the RAID 5 disk with /proc/mdstat

# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md5 : active raid5 sdd[3] sdc[1] sdb[0]

2096128 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/3] [UUU]

unused devices: <none>

- Create a partition on the RAID 5 disk

# fdisk /dev/md5

- Verify the disk size

# fdisk -l /dev/md5

Disk /dev/md5: 2146 MB, 2146435072 bytes

2 heads, 4 sectors/track, 524032 cylinders

Units = cylinders of 8 * 512 = 4096 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 524288 bytes / 1048576 bytes

Disk identifier: 0x16b481fd

Device Boot Start End Blocks Id System

/dev/md5p1 257 524032 2095104 fd Linux raid autodetect

- Add the disk /dev/sde to the RAID 5 array, so that it contains four disks.

# mdadm --manage /dev/md5 --add /dev/sde

mdadm: added /dev/sde

- Verify the new state of the RAID disk

# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md5 : active raid5 sde[4](S) sdc[1] sdd[3] sdb[0]

2096128 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/3] [UUU]

- Checking the size again shows no change:

# fdisk -l /dev/md5

Disk /dev/md5: 2146 MB, 2146435072 bytes

2 heads, 4 sectors/track, 524032 cylinders

Units = cylinders of 8 * 512 = 4096 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 524288 bytes / 1048576 bytes

Disk identifier: 0x16b481fd

Device Boot Start End Blocks Id System

/dev/md5p1 257 524032 2095104 fd Linux raid autodetect

Note: The size has not increased, because the new disk was added as a spare: it is marked (S) in /proc/mdstat, and the array still has three active members ([3/3]).

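To use the new disk for capacity, grow the array to four active devices with --raid-devices. This starts a reshape that restripes the data across all four disks:

# mdadm --grow /dev/md5 --raid-devices=4

mdadm: Need to backup 3072K of critical section..

The --size=max option does something different: it makes the array use all of the available space on each of its current members (for example, after every member has been replaced with a larger disk). It does not turn a spare into an active member:

# mdadm --grow /dev/md5 --size=max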

- To monitor the reshape, check /proc/mdstat. (You can use the watch command to refresh the output periodically in full screen: watch cat /proc/mdstat)

# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md5 : active raid5 sde[4] sdc[1] sdd[3] sdb[0]

2096128 blocks super 1.2 level 5, 512k chunk, algorithm 2 [4/4] [UUUU]

[==>..................] reshape = 14.7% (154948/1048064) finish=4.5min speed=3265K/sec

unused devices: <none>

- Wait until the reshape finishes:

# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md5 : active raid5 sde[4] sdc[1] sdd[3] sdb[0]

3144192 blocks super 1.2 level 5, 512k chunk, algorithm 2 [4/4] [UUUU]

unused devices: <none>
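Instead of watching /proc/mdstat by hand, the wait can be scripted. The sketch below assumes bash and a sed that supports -E (GNU or BSD); `md_progress` and `wait_for_md_idle` are hypothetical names, and the 10-second poll interval is arbitrary. mdadm itself also offers a --wait option in misc mode for the same purpose.

```shell
#!/usr/bin/env bash
# md_progress
# Hypothetical helper: read /proc/mdstat-formatted text on stdin and print
# "<operation> <percent>" for each running reshape/resync/recovery/check.
md_progress() {
    sed -nE 's/.*(reshape|resync|recovery|check) *= *([0-9.]+%).*/\1 \2/p'
}

# wait_for_md_idle
# Hypothetical helper: poll /proc/mdstat until no operation is reported.
wait_for_md_idle() {
    local progress
    while progress=$(md_progress < /proc/mdstat); [ -n "$progress" ]; do
        echo "in progress: $progress"
        sleep 10    # arbitrary poll interval
    done
    echo "no reshape/resync/recovery running"
}
```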

- To verify the size of the disk /dev/md5

# mdadm --detail /dev/md5

/dev/md5:

Version : 1.2

Creation Time : Sat Nov 2 00:06:53 2013

Raid Level : raid5

Array Size : 3144192 (3.00 GiB 3.22 GB)

Used Dev Size : 1048064 (1023.67 MiB 1073.22 MB)

Raid Devices : 4

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Sat Nov 2 00:25:03 2013

State : clean

Active Devices : 4

Working Devices : 4

Failed Devices : 0

Spare Devices : 0

Layout : left-symmetric

Chunk Size : 512K

Name : rhel:5 (local to host rhel)

UUID : 9e7113b6:2d5dc78d:db553d53:d6cc4555

Events : 64

Number Major Minor RaidDevice State

0 8 16 0 active sync /dev/sdb

1 8 32 1 active sync /dev/sdc

3 8 48 2 active sync /dev/sdd

4 8 64 3 active sync /dev/sde

- You can also verify the size with fdisk:

# fdisk -l /dev/md5

Disk /dev/md5: 3219 MB, 3219652608 bytes

2 heads, 4 sectors/track, 786048 cylinders

Units = cylinders of 8 * 512 = 4096 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 524288 bytes / 1048576 bytes

Disk identifier: 0x16b481fd

Device Boot Start End Blocks Id System

/dev/md5p1 257 524032 2095104 fd Linux raid autodetect

Note that only the device has grown: the existing partition /dev/md5p1 (and any file system on it) keeps its original size.

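- For troubleshooting (removing a disk from the RAID 5 array): an active member cannot be removed directly, so mark it as failed first and then remove it:

# mdadm --manage /dev/md5 --fail /dev/sdc --remove /dev/sdc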
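After a member fails or is removed, the status field in /proc/mdstat shows `_` in its slot (for example `[U_UU]`), and the slot number corresponds to the RaidDevice column of mdadm --detail. A small bash sketch that lists the empty slots; `missing_slots` is a hypothetical name.

```shell
#!/usr/bin/env bash
# missing_slots STATUS
# Hypothetical helper: given a /proc/mdstat status field such as "[U_UU]",
# print the 0-based slot number of every "_" (missing or failed member).
missing_slots() {
    local status=${1#\[} i
    status=${status%\]}                  # strip the surrounding brackets
    for (( i = 0; i < ${#status}; i++ )); do
        if [[ ${status:i:1} == "_" ]]; then
            echo "$i"
        fi
    done
}

missing_slots '[U_UU]'    # → 1
```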

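- To stop the RAID array and erase the RAID superblocks from its former members (so that the disks can be reused):

# mdadm -S /dev/md5

# mdadm --zero-superblock /dev/sdb

# mdadm --zero-superblock /dev/sdc

# mdadm --zero-superblock /dev/sdd

# mdadm --zero-superblock /dev/sde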

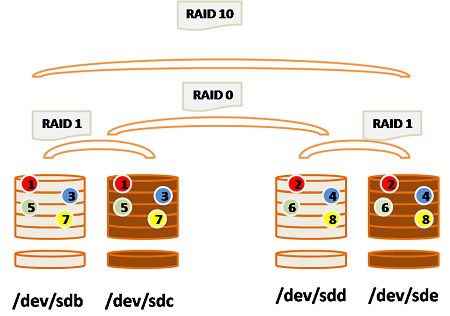

5- Set up RAID 10:

RAID 1+0, sometimes called RAID 1&0 or RAID 10, is similar to RAID 0+1 except that the order of the RAID levels is reversed: RAID 10 is a stripe of mirrors.

RAID 10 (RAID 1&0)
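mdadm's raid10 level with its default near=2 layout (reported as "2 near-copies" in /proc/mdstat) places the two copies of each chunk on adjacent members. With four disks, disks 0 and 1 mirror each other, as do disks 2 and 3, and chunks are striped across the two pairs. The bash sketch below is a simplified model of the near layout under that assumption; `raid10_near_locate` is a hypothetical name.

```shell
#!/usr/bin/env bash
# raid10_near_locate CHUNK NDISKS [COPIES]
# Hypothetical helper (simplified model of the "near" layout): copy c of
# logical chunk k occupies linear position p = k * COPIES + c; positions fill
# each row of NDISKS members left to right, so the member is p % NDISKS and
# the row is p / NDISKS.
raid10_near_locate() {
    local chunk=$1 ndisks=$2 copies=${3:-2} c p
    for (( c = 0; c < copies; c++ )); do
        p=$(( chunk * copies + c ))
        echo "copy $c: disk $(( p % ndisks )), row $(( p / ndisks ))"
    done
}

raid10_near_locate 0 4    # both copies in row 0, on disks 0 and 1
raid10_near_locate 1 4    # both copies in row 0, on disks 2 and 3
raid10_near_locate 2 4    # both copies in row 1, on disks 0 and 1
```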

I have four disks, /dev/sdb, /dev/sdc, /dev/sdd and /dev/sde, of 1 GB each.

# mdadm --create /dev/md10 --level=10 --raid-devices=4 /dev/sdb /dev/sdc /dev/sdd /dev/sde

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md10 started.

- Verify with /proc/mdstat

# cat /proc/mdstat

Personalities : [raid10]

md10 : active raid10 sde[3] sdd[2] sdc[1] sdb[0]

2096128 blocks super 1.2 512K chunks 2 near-copies [4/4] [UUUU]

unused devices: <none>

- To verify the size

# mdadm --detail /dev/md10

/dev/md10:

Version : 1.2

Creation Time : Sat Nov 2 00:43:34 2013

Raid Level : raid10

Array Size : 2096128 (2047.34 MiB 2146.44 MB)

Used Dev Size : 1048064 (1023.67 MiB 1073.22 MB)

Raid Devices : 4

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Sat Nov 2 00:44:11 2013

State : clean

Active Devices : 4

Working Devices : 4

Failed Devices : 0

Spare Devices : 0

Layout : near=2

Chunk Size : 512K

Name : rhel:10 (local to host rhel)

UUID : 43a7f30a:1f113564:2f264831:ccfb1d07

Events : 17

Number Major Minor RaidDevice State

0 8 16 0 active sync /dev/sdb

1 8 32 1 active sync /dev/sdc

2 8 48 2 active sync /dev/sdd

3 8 64 3 active sync /dev/sde

Conclusion:

This article showed how to configure RAID 0, 1, 5 and 10 with mdadm on CentOS, Red Hat and Ubuntu/Debian.